
CSC212: Human-Computer Interaction

In fall 2024, I took CSC212: Human-Computer Interaction at the University of Rochester, taught by Professor Yukang Yang. Throughout the semester, we explored the full spectrum of the app development process, from needfinding and contextual inquiry to rapid prototyping, usability testing, and evaluation. We studied foundational topics like Fitts’ Law, the model human processor, and ethical UX design, while also engaging with cutting-edge areas like AR/VR, AI interfaces, remote collaboration, and digital fabrication.

The course featured influential readings and real-world examples, including Doug Engelbart’s “Mother of All Demos” and modern usability heuristics, along with several guest lectures on topics such as accessibility, wearables, and learning technologies. In addition, Professor Yang was understanding, supportive, and passionate: he was always willing to meet with students and was clearly invested in helping us succeed. His enthusiasm for HCI made the class both approachable and inspiring.

Overall, CSC212 helped me see the design process from the user’s perspective, introduced me to new tools and ways of thinking, and deepened my appreciation for how people interact with technology in everyday life. It was a fun, fast-paced, and intellectually rewarding course that I’d recommend to anyone interested in the intersection of design, computing, and human behavior.

Our Project

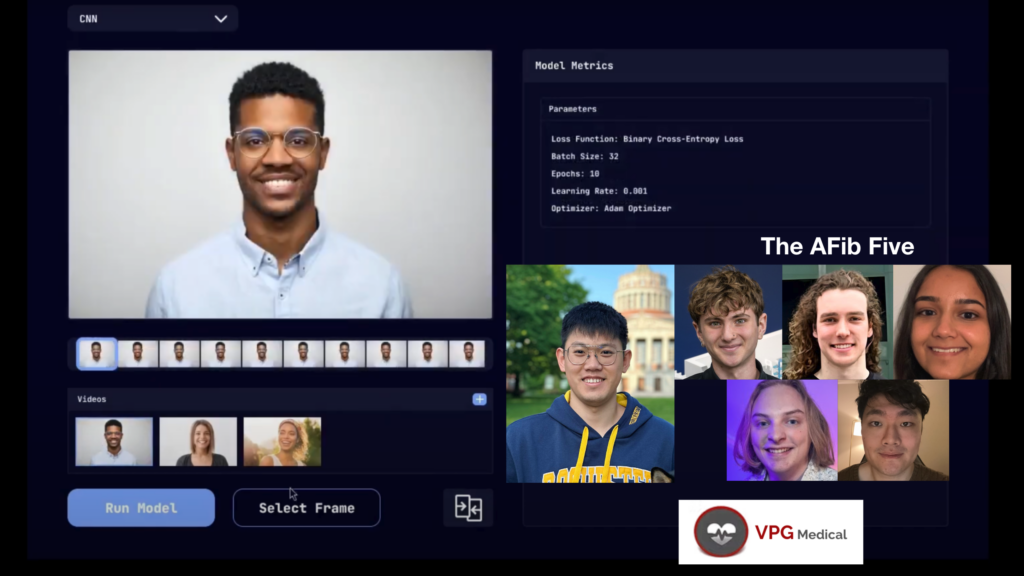

For the final project, we formed teams of four to five students and applied the full app development process to build an app-based solution to a real-world problem. My group, The AFib Five, partnered with VPGTechnologies, a local emerging company led by CEO Jean-Phillipe Courdec, to tackle a healthcare challenge. VPG is developing HealthKam AFib, a non-invasive mobile tool that uses facial video and photoplethysmography (PPG) to detect atrial fibrillation (AFib), a common and serious heart rhythm disorder. PPG estimates the pulse optically from subtle changes in skin color caused by blood flow with each heartbeat, so an ordinary camera can act as the sensor.
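The project description does not say how HealthKam AFib decides that a rhythm looks like AFib. As a rough illustration only: one common idea in pulse-based screening is that AFib makes the intervals between heartbeats irregular. The sketch below assumes pulse-peak timestamps have already been extracted from the video; the helper names and the 0.1 cutoff are hypothetical and are not VPG's method.

```python
import math
from typing import Sequence

# Hypothetical cutoff for illustration only; a real screener would be clinically validated.
IRREGULARITY_THRESHOLD = 0.1


def inter_beat_intervals(peak_times_s: Sequence[float]) -> list[float]:
    """Convert successive pulse-peak timestamps (seconds) into inter-beat intervals."""
    return [b - a for a, b in zip(peak_times_s, peak_times_s[1:])]


def normalized_rmssd(ibis: Sequence[float]) -> float:
    """Root mean square of successive interval differences, divided by the mean interval.

    A regular rhythm gives values near zero; the "irregularly irregular"
    rhythm typical of AFib gives noticeably larger values.
    """
    if len(ibis) < 3:
        raise ValueError("need at least three inter-beat intervals")
    diffs = [b - a for a, b in zip(ibis, ibis[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return rmssd / (sum(ibis) / len(ibis))


def flag_possible_afib(peak_times_s: Sequence[float],
                       threshold: float = IRREGULARITY_THRESHOLD) -> bool:
    """Return True when the beat-to-beat irregularity exceeds the (hypothetical) threshold."""
    return normalized_rmssd(inter_beat_intervals(peak_times_s)) > threshold
```

Normalizing by the mean interval keeps the score comparable between people with slow and fast resting heart rates.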

However, skin tone can significantly affect the accuracy of PPG readings. Melanin absorbs visible light, including the green wavelengths that typically carry the strongest pulse signal, so darker skin returns a weaker pulse signal relative to noise. Current PPG systems are also often calibrated primarily on individuals with lighter skin tones, which further reduces accuracy for people with darker complexions. This discrepancy can lead to inequities in health outcomes, particularly for underrepresented populations.
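Comparing accuracy across skin tones first requires a skin-tone label for each recording. The source does not say how the team assigned these; one widely used option is the Individual Typology Angle (ITA), computed from CIELAB lightness L* and the yellow-blue component b* as ITA = arctan((L* - 50) / b*) × 180/π. The minimal sketch below assumes an average sRGB skin color per recording is already available and uses the conventional six ITA categories; the function names are illustrative.

```python
import math

# D65 reference white used for the sRGB -> CIE XYZ -> CIELAB conversion.
_WHITE = (0.95047, 1.0, 1.08883)


def _linearize(channel_8bit: int) -> float:
    """Undo the sRGB gamma curve for one 0-255 channel value."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t: float) -> float:
    """CIELAB companding function (cube root with a linear segment near zero)."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def srgb_to_lab(r8: int, g8: int, b8: int) -> tuple[float, float, float]:
    """Convert an 8-bit sRGB color to CIELAB (L*, a*, b*)."""
    r, g, b = (_linearize(v) for v in (r8, g8, b8))
    x = 0.4124 * r + 0.3576 * g + 0.1805 * b
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    z = 0.0193 * r + 0.1192 * g + 0.9505 * b
    fx, fy, fz = _f(x / _WHITE[0]), _f(y / _WHITE[1]), _f(z / _WHITE[2])
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def ita_degrees(r8: int, g8: int, b8: int) -> float:
    """Individual Typology Angle in degrees.

    atan2(L* - 50, b*) equals arctan((L* - 50) / b*) whenever b* > 0,
    which holds for skin colors, and avoids dividing by zero.
    """
    lightness, _, b_star = srgb_to_lab(r8, g8, b8)
    return math.degrees(math.atan2(lightness - 50, b_star))


def classify_ita(ita: float) -> str:
    """Map an ITA value to the conventional six skin-tone categories."""
    if ita > 55:
        return "very light"
    if ita > 41:
        return "light"
    if ita > 28:
        return "intermediate"
    if ita > 10:
        return "tan"
    if ita > -30:
        return "brown"
    return "dark"
```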

To address this issue, our team developed a tool that evaluates how well PPG-based AFib detection performs across different skin tones. VPGTechnologies gave us access to facial video data from a medical study of AFib patients, which we used to train and test our models and to examine these disparities rigorously (with full respect for the patients’ privacy, of course).
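Evaluating detection across skin tones comes down to computing the same metrics separately for each skin-tone group and comparing them. The sketch below is a hypothetical illustration, not the team's actual tool: it assumes each recording has a skin-tone group label, a ground-truth AFib label from the study, and a model prediction. It reports sensitivity and specificity alongside accuracy, because a good overall accuracy can hide a group in which AFib cases are systematically missed.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class GroupMetrics:
    n: int
    accuracy: float
    sensitivity: Optional[float]  # None when the group has no AFib-positive recordings
    specificity: Optional[float]  # None when the group has no AFib-negative recordings


def metrics_by_group(records: Iterable[tuple[str, bool, bool]]) -> dict[str, GroupMetrics]:
    """records: one (skin_tone_group, has_afib, predicted_afib) tuple per recording."""
    counts = defaultdict(lambda: {"tp": 0, "tn": 0, "fp": 0, "fn": 0})
    for group, truth, pred in records:
        c = counts[group]
        if truth and pred:
            c["tp"] += 1  # AFib correctly detected
        elif truth:
            c["fn"] += 1  # AFib missed
        elif pred:
            c["fp"] += 1  # false alarm
        else:
            c["tn"] += 1  # correctly cleared

    result = {}
    for group, c in counts.items():
        n = sum(c.values())
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        result[group] = GroupMetrics(
            n=n,
            accuracy=(c["tp"] + c["tn"]) / n,
            sensitivity=c["tp"] / positives if positives else None,
            specificity=c["tn"] / negatives if negatives else None,
        )
    return result
```

Returning `None` instead of a number for an empty class keeps a small group from appearing perfectly accurate simply because it had no AFib cases.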

We followed the full Human-Computer Interaction (HCI) design process throughout the project. During needfinding, we interviewed engineers at VPG to understand the technical and clinical constraints of the current system. We then developed prototypes, including Figma wireframes and interactive software demos that visualized how well PPG detection performed across diverse skin tones.

For implementation and evaluation, we trained and tested two computational models. One used a convolutional neural network (CNN), and the other analyzed the color space of the region of interest (ROI) around the patient’s face in the video. These models were integrated into a comparative interface that helped visualize detection accuracy by skin tone, enabling VPG to better understand where their current system may fall short and where improvements can be made.
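The source does not detail how the color-space model works. A common baseline in camera-based PPG, shown here as a minimal sketch under that assumption, averages the pixels inside the face ROI in every frame, keeps the green channel (which usually carries the strongest pulse signal), and picks the dominant frequency within a plausible heart-rate band. The function names, the 42-240 bpm band, and the brute-force frequency scan are illustrative choices; a real pipeline would add face tracking, detrending, and band-pass filtering.

```python
import math
from typing import Sequence


def roi_mean_rgb(pixels: Sequence[tuple[int, int, int]]) -> tuple[float, float, float]:
    """Average the RGB values of the pixels inside one frame's face ROI."""
    n = len(pixels)
    return (
        sum(p[0] for p in pixels) / n,
        sum(p[1] for p in pixels) / n,
        sum(p[2] for p in pixels) / n,
    )


def estimate_pulse_bpm(green_trace: Sequence[float], fps: float,
                       min_bpm: float = 42.0, max_bpm: float = 240.0,
                       step_bpm: float = 0.5) -> float:
    """Return the candidate rate (bpm) with the most spectral power in the green trace.

    The trace holds one ROI-averaged green value per video frame.
    """
    mean = sum(green_trace) / len(green_trace)
    x = [v - mean for v in green_trace]  # remove the constant baseline skin color
    best_bpm, best_power = min_bpm, -1.0
    bpm = min_bpm
    while bpm <= max_bpm:
        f_hz = bpm / 60.0
        # Single-frequency discrete Fourier transform evaluated at f_hz.
        re = sum(v * math.cos(2 * math.pi * f_hz * i / fps) for i, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * f_hz * i / fps) for i, v in enumerate(x))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
        bpm += step_bpm
    return best_bpm
```

On darker skin, the pulse component of this green trace shrinks relative to noise, which is one way the skin-tone disparity described above can surface in a color-based model.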

Overall, this collaboration was technically engaging and deeply meaningful. It was a valuable opportunity to apply HCI principles to a real-world healthcare application that could lead to more equitable health monitoring for all.